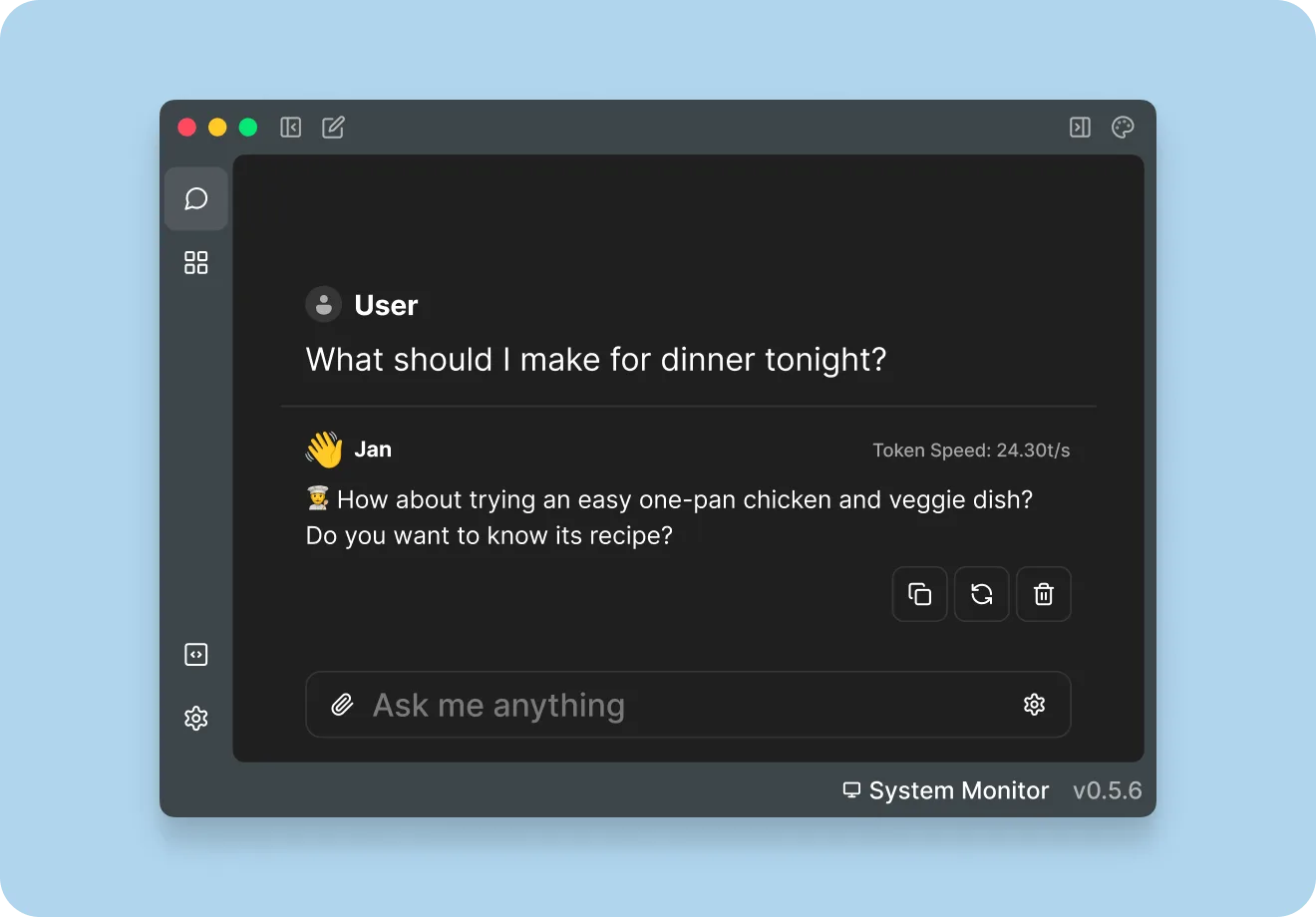

TL;DR: Jan is an open-source app that runs ChatGPT-like AIs 100% offline on your computer (PC, Mac, Linux). It’s a powerful, privacy-focused alternative to cloud services, perfect for developers and tech enthusiasts.

Tired of depending on OpenAI’s servers? Worried about what happens to your data when you chat with an AI? Or maybe, like me, you just love having full control over your tools. If any of that sounds familiar, then Jan is probably the solution you’ve been waiting for.

For the past few months, the local AI ecosystem has been booming, and Jan has quickly established itself as a key player. In this article, we’ll break down what it is, how to install it, and most importantly, how it really stacks up against the competition. Buckle up, we’re going local.

🤔 What Is Jan AI? More Than Just a ChatGPT Alternative

Jan bills itself as “an open-source alternative to ChatGPT that runs 100% offline.” It’s a simple definition, but it hides a much greater ambition. Rather than just being a “wrapper” for language models, Jan is a true desktop platform for interacting with generative AIs, without ever sending your conversations to the cloud.

The 3 Pillars of Jan: 100% Local, Open-Source, and Extensible

To understand Jan, you need to remember three things:

- 100% Local: Everything, from the software to the language models and your conversations, stays on your machine. When you chat with a local model, your prompts never leave your computer: no third-party servers, no external analysis of your conversations.

- Open-Source: The code is available for everyone on GitHub. This is a pledge of transparency and security. Anyone can audit the code, modify it, and contribute to its improvement. That’s the power of community.

- Extensible: Jan is designed to be modular. It can not only serve as a chat interface but also as a local inference server, compatible with the OpenAI API. In short, you can use it as a backend for your own applications.

Who Is This Tool Really For?

While Jan can be used by anyone, it particularly shines for certain profiles:

- Developers: To prototype and build AI-based applications with no API fees and full control over the environment.

- Researchers and Students: To experiment with different models in a private and affordable way.

- Privacy-Conscious Users: For anyone who wants to leverage the power of LLMs without compromising their personal or professional data.

🚀 Installation and First Run: Your AI in 5 Minutes Flat

One of Jan’s biggest strengths is its simple installation. The team has done a great job of making the experience as smooth as possible.

Hardware Requirements: What Do You Need?

You don’t need a supercomputer, but you do need a decent machine.

- RAM: 16 GB is a comfortable minimum for running medium-sized models (7 billion parameters). With 32 GB or more, you’ll have headroom for larger models.

- Storage: Plan for a few dozen GB of free space. Models are heavy (from 4 GB to over 70 GB).

- GPU (Optional but recommended): If you have a dedicated graphics card (preferably NVIDIA), performance will be significantly boosted. Otherwise, Jan will use your CPU, but it will be slower.
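To get a feel for these numbers, here is a back-of-the-envelope sketch. It assumes the weights dominate memory use and that size is roughly parameters × bits per weight ÷ 8. The function names, the 4.5 bits-per-weight figure, and the 1.2 overhead factor are illustrative assumptions of mine, not values published by Jan.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_ram(params_billions: float, bits_per_weight: float,
                ram_gb: float, overhead_factor: float = 1.2) -> bool:
    """Rough check only. overhead_factor is a guess covering the context
    cache and runtime buffers; real usage depends on the model and settings."""
    needed = estimate_model_size_gb(params_billions, bits_per_weight) * overhead_factor
    return needed <= ram_gb


# Compare full 16-bit weights with a roughly 4-bit quantized version.
for name, params in [("7B", 7), ("8B", 8), ("70B", 70)]:
    fp16 = estimate_model_size_gb(params, 16)
    q4 = estimate_model_size_gb(params, 4.5)  # assumed average for a 4-bit quant
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at ~4.5 bits/weight")
```

Treat the result as an order of magnitude: it explains why a quantized 7B model is at home on a 16 GB machine while a 70B model is not.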

Installation Guide (Windows, macOS, Linux)

- Go to Jan’s official website.

- Download the installer for your operating system.

- Launch it and follow the instructions. It’s as simple as installing any other application.

First Contact: The Interface and Downloading Your First Model

Once Jan is launched, you’re greeted by a clean interface. On the left, your conversations. In the center, the chat area. But before we can chat, our AI needs a brain!

Head to the “Hub.” This is Jan’s model catalog. To start, I recommend a versatile model like Mistral 7B Instruct or Llama 3 8B Instruct. Click “Download” and wait. Once the download is complete, you can start your first conversation. That’s it!

Insight: GGUF, the Model Format to Know

You’ll often see the acronym GGUF. This is the file format used by most local AI tools. Created by the team behind llama.cpp, it packages a model’s weights and metadata in a single file, and it supports “quantized” weights, meaning weights stored at lower numerical precision. Quantization shrinks the model and the resources needed to run it, often with only a small loss in output quality. It’s thanks to this combination that we can run such powerful AIs on personal computers.
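To make “quantized” concrete, here is a toy sketch of the general idea: a block of weights shares one scale factor, and each weight is stored as a small integer. This is a deliberately simplified illustration, not the actual scheme used by llama.cpp or GGUF files, and the function names are my own.

```python
def quantize_block(values, bits=4):
    """Map floats to small signed integers that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1                  # 7 for 4-bit values
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0        # avoid dividing by zero
    quants = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quants]


weights = [0.12, -0.53, 0.98, -0.07, 0.31, -0.88, 0.44, 0.05]
scale, quants = quantize_block(weights)
restored = dequantize_block(scale, quants)

# Rounding to the nearest step means each value is off by at most scale / 2.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("stored integers:", quants)
print(f"max error: {max_error:.4f} (bound: {scale / 2:.4f})")
```

Each weight now needs 4 bits instead of 16 or 32, at the cost of a small, bounded rounding error. That trade-off is what the quantized models in the Hub rely on.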

🥊 Jan AI vs. The Competition: The Local AI Showdown

Jan isn’t alone in the ring. Its two main competitors are Ollama and LM Studio. So, which one should you choose?

| Criterion | Jan | Ollama | LM Studio |
|---|---|---|---|
| Ease of Use | ✅✅✅ (Full GUI) | ✅ (Command Line, API) | ✅✅✅ (Full GUI) |
| Features | Chat, API Server, Model Mgmt | API Server, Command Line | Chat, Model Discovery, Inference Settings, Local Server |
| Philosophy | All-in-one platform | Minimalist tool for developers | “IDE” for exploring LLMs |
| Open Source | Yes | Yes | No |

Jan vs. Ollama: User Experience First

Ollama is a fantastic tool, but it’s primarily aimed at developers who are comfortable with the command line. Jan, on the other hand, offers an out-of-the-box experience with a polished graphical interface. It’s more accessible for beginners.

Jan vs. LM Studio: Two Different Philosophies

LM Studio is excellent for exploring models and adjusting inference parameters, with an interface rich in settings. Jan focuses on a more direct and integrated experience, with a built-in API server and a fully open-source codebase. That last point is a major advantage over the proprietary LM Studio.

🛠️ Going Further with Jan: The Game-Changing Features

Where Jan gets really interesting is when you explore its advanced functions.

Using Jan as an OpenAI-Compatible API Server

This is THE killer feature for developers. With a single click in the settings, Jan starts a local server, on port 1337 by default. This server exposes an API modeled on OpenAI’s, so existing OpenAI client code can usually talk to it.

Basically, this means you can take code that uses the gpt-3.5-turbo or gpt-4 API, change the base URL to http://localhost:1337/v1, set the model name to one you have downloaded in Jan, and your application will work with your local model. For the common chat endpoints, nothing else usually needs to change. It’s incredibly powerful.
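Here is a minimal sketch of what such a call can look like, using only Python’s standard library. It assumes the local server is running at the base URL above and accepts the usual OpenAI-style chat completions request. The model id and the helper names are placeholders of mine: use the id Jan shows for the model you downloaded, and add an Authorization header if your setup requires an API key.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1337/v1"   # base URL given in this article
MODEL_ID = "mistral-7b-instruct"        # hypothetical id; replace with your model's


def build_chat_request(prompt, model=MODEL_ID, base_url=BASE_URL):
    """Build an OpenAI-style chat completions request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the assistant's reply text.
    Assumes the response follows the OpenAI chat completions shape."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Explain GGUF in one sentence."))` should return a reply from your local model. Code that already uses an OpenAI client library typically only needs its base URL and model name changed in the same way.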

Advanced Customization: “Threads” and “Assistants”

Jan incorporates the concept of “Threads” and “Assistants,” much like the OpenAI API. You can create assistants with specific instructions (e.g., “You are an expert in Python”) to reuse them in different conversations.
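One way to picture the relationship: an assistant is a reusable set of instructions, and each thread is a separate conversation that starts from those instructions. The sketch below is only a mental model with class and method names I made up. It is not Jan’s internal data model or its API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Assistant:
    """A named, reusable set of instructions (hypothetical structure)."""
    name: str
    instructions: str


@dataclass
class Thread:
    """One conversation that reuses an assistant's instructions."""
    assistant: Assistant
    messages: list = field(default_factory=list)

    def add_user_message(self, text):
        self.messages.append({"role": "user", "content": text})

    def to_chat_messages(self):
        # The instructions travel as a system message ahead of the conversation.
        system = {"role": "system", "content": self.assistant.instructions}
        return [system, *self.messages]


python_expert = Assistant("Python expert", "You are an expert in Python.")

# Two independent conversations share the same assistant.
debugging = Thread(python_expert)
debugging.add_user_message("Why does my list comprehension raise a NameError?")
packaging = Thread(python_expert)
packaging.add_user_message("How should I structure a small package?")

print(debugging.to_chat_messages())
```

The message list produced by `to_chat_messages()` has the same shape an OpenAI-style chat endpoint expects, which is why the two concepts map so naturally onto each other.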

Contributing to the Project: The Power of Open Source

The project is very active on GitHub. If you have development skills, feel free to check out the project’s GitHub repository, report bugs, or even propose improvements.

✅ My Take on Jan AI: Should You Adopt It in 2025?

After several weeks of using it daily, both as a chat tool and a local dev server, my verdict is very positive.

The Pros: What I Loved

- Simplicity: It’s by far the easiest tool to get started with for a “ChatGPT-like” experience locally.

- Versatility: The combination of a chat interface and an API server is a game-changer.

- 100% Open-Source: This is an essential criterion for a tool that handles potentially sensitive data.

- Project Responsiveness: Updates are frequent and the community is active.

The Cons: Where It Can Still Improve

- Resource Consumption: Obviously, running an LLM is resource-intensive. The application can sometimes be a bit RAM-hungry.

- Fewer Tuning Settings: Compared to LM Studio, there are fewer options for adjusting a model’s inference parameters on the fly. It’s a choice for simplicity, but experts might feel limited.

In conclusion, Jan has earned a prime spot on my dock. It has become my go-to for any task that doesn’t require the raw power (and cost) of a GPT-4, but where confidentiality is paramount.

❓ FAQ – Your Questions About Jan AI

What are the best models to use with Jan?

For a good balance of performance and quality, models from the Mistral (7B) and Llama 3 (8B) families are excellent starting points. If you have a powerful setup, you can try larger models like Mixtral 8x7B. The best place to discover more is Hugging Face.

Is Jan AI really free?

Yes, Jan is 100% free and open-source. The only “costs” are the electricity your computer consumes and the storage space for the models.

Can I use Jan without an internet connection?

Absolutely! Once you have downloaded the application and at least one model, you can turn off your Wi-Fi. Jan will work perfectly in a closed circuit on your machine. This is one of its biggest advantages.